
When developers scale LLM workloads to production, one question always comes up: which GPUs should I use, how many will I need, and how much is this going to cost me? Not an average or a back-of-the-envelope guess, but real numbers that reflect the latency you need, the model you’re running, and the traffic you expect.

It’s a deceptively simple question, but the answer depends on a multitude of factors:

It gets more complicated because most infra calculators assume uniform conditions that don’t match real-world inference, and they offer no way to compare the available options.

Sizing for LLM inference is more nuanced than just checking if your model fits in memory. Model architecture (dense vs MoE), token throughput, concurrency, and the quirks of GPU memory bandwidth all shape your real-world capacity. Even the most detailed spec sheets and calculators (like those from VMware or NVIDIA) can’t fully capture the dynamic nature of production traffic: burst loads, variable prompt sizes, and shifting latency requirements.
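To see why "does it fit in memory" is only the starting point, here is a minimal sketch of the usual weights-plus-KV-cache estimate. It is not how the Inference Sizer works internally. It assumes 16-bit weights and cache, a rounded 8B parameter count, and the commonly published layer and head counts for an 8B Llama-style model with grouped-query attention. The names `ModelShape`, `weight_bytes`, `kv_bytes_per_token`, and `total_bytes` are made up for this example, and activations, framework overhead, and fragmentation are ignored.

```python
from dataclasses import dataclass

GIB = 1024 ** 3


@dataclass(frozen=True)
class ModelShape:
    """Architecture numbers needed for a rough memory estimate (illustrative)."""
    n_params: int    # total parameter count (rounded)
    n_layers: int    # transformer layers
    n_kv_heads: int  # key/value heads (fewer than query heads under GQA)
    head_dim: int    # dimension of each attention head


def weight_bytes(shape: ModelShape, bytes_per_param: int = 2) -> int:
    """Memory for the weights alone; 2 bytes per parameter assumes FP16/BF16."""
    return shape.n_params * bytes_per_param


def kv_bytes_per_token(shape: ModelShape, bytes_per_value: int = 2) -> int:
    """KV-cache bytes one token occupies across all layers."""
    # Factor 2: one key vector and one value vector per layer and KV head.
    return 2 * shape.n_layers * shape.n_kv_heads * shape.head_dim * bytes_per_value


def total_bytes(shape: ModelShape, tokens_per_request: int, concurrent_requests: int) -> int:
    """Weights plus KV cache for a given context length and concurrency."""
    kv = kv_bytes_per_token(shape) * tokens_per_request * concurrent_requests
    return weight_bytes(shape) + kv


# Assumed shape for an 8B Llama-style model; the parameter count is rounded.
LLAMA_8B = ModelShape(n_params=8_000_000_000, n_layers=32, n_kv_heads=8, head_dim=128)

if __name__ == "__main__":
    for concurrency in (1, 32, 128):
        gib = total_bytes(LLAMA_8B, tokens_per_request=2048, concurrent_requests=concurrency) / GIB
        print(f"{concurrency:>3} concurrent requests x 2048 tokens: {gib:.1f} GiB")
```

Under these assumptions the weights are a fixed cost, while the KV cache grows linearly with both context length and concurrency, which is why the same model can fit comfortably on one GPU at low traffic and overflow it at peak.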

FlexAI’s Inference Sizer is a developer-first tool that translates your workload parameters into actionable GPU requirements, factoring in the details that matter in production. No sign-up walls, no black-box estimates. Just clear, model-aware answers.

Inference has gone from a research afterthought to a core infra problem. Developers need answers to very practical questions:

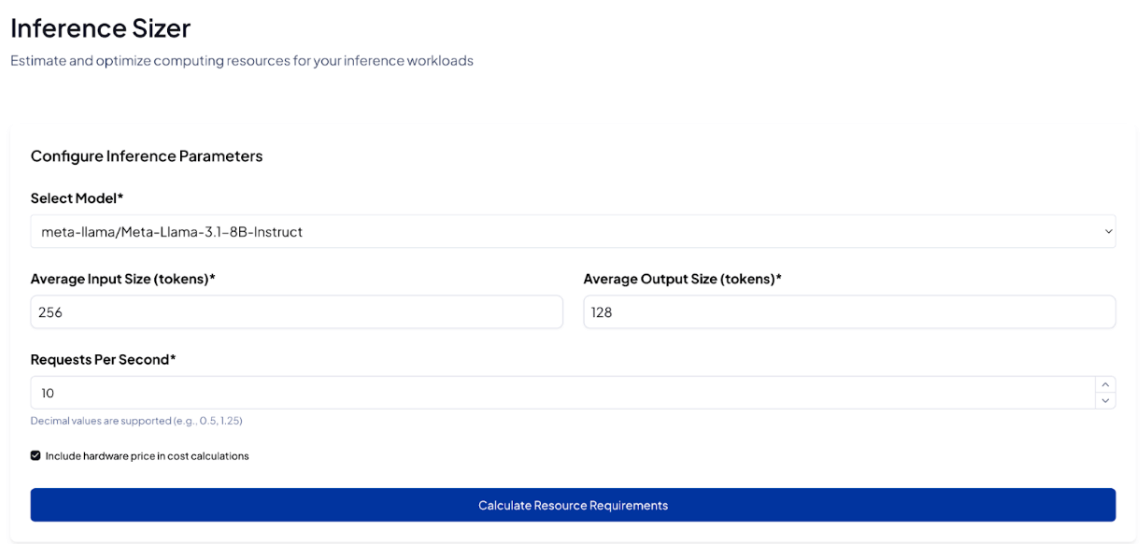

The FlexAI Inference Sizer answers these by letting you specify your LLM (from Hugging Face), input/output token sizes, and the volume of requests per second. The tool is based on internal benchmarking done with FlexBench, our internal tool built to publish SOTA MLPerf results. It simulates real-world behavior, and with FlexAI’s auto-scaling capability (in beta) you only need to know your maximum number of requests per second for the calculator to output a deployment-ready GPU configuration. You can tune the output for cost, latency, TTFT (time to first token), and more.
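For intuition about how these inputs interact, the sketch below turns a peak request rate into a GPU count. It is a naive approximation, not the Sizer's benchmark-driven method. The function `gpus_needed`, the `per_gpu_tokens_per_s` throughput figure, and the `headroom` factor are all hypothetical: in practice per-GPU throughput has to be measured for your model, hardware, and prompt sizes.

```python
import math


def gpus_needed(
    peak_rps: float,
    output_tokens: int,
    per_gpu_tokens_per_s: float,
    headroom: float = 0.7,
) -> int:
    """Rough GPU count for a peak load.

    per_gpu_tokens_per_s is an assumed, separately measured decode throughput
    for one GPU; headroom is the fraction of it you are willing to plan on,
    leaving the rest for bursts.
    """
    if per_gpu_tokens_per_s <= 0:
        raise ValueError("per_gpu_tokens_per_s must be positive")
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")

    demand_tokens_per_s = peak_rps * output_tokens       # tokens the fleet must generate
    usable_tokens_per_s = per_gpu_tokens_per_s * headroom  # what one GPU can safely deliver
    return max(1, math.ceil(demand_tokens_per_s / usable_tokens_per_s))


if __name__ == "__main__":
    # Placeholder numbers, not benchmark results.
    for rps in (10, 50, 200):
        print(rps, "req/s ->", gpus_needed(rps, output_tokens=128, per_gpu_tokens_per_s=2000.0), "GPU(s)")
```

A single division like this ignores prompt length, batching effects, and latency targets, which is the gap benchmark-based sizing is meant to close.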

Let’s say you’re building a production-ready internal chatbot using Meta’s LLaMA 3.1 8B Instruct model. Your expected workload:

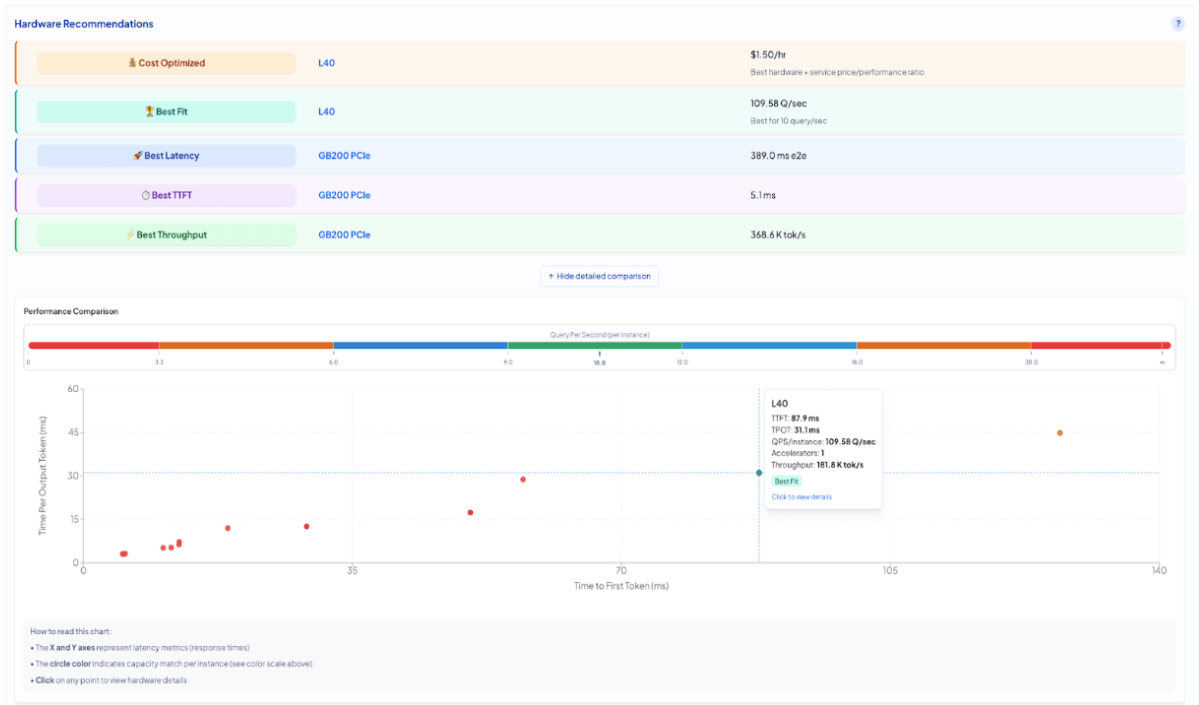

When you hit Calculate Resource Requirements, here’s what you get:

This setup is cost-efficient and sufficient for internal tools or moderately interactive applications.

However, if you're optimizing for faster response times, the Sizer lets you compare:

Let’s say your chatbot generates a 128-token response:

That’s about a 250 ms difference end-to-end. In a chat context, where tokens are streamed, this difference is rarely noticeable. Users perceive responsiveness from how quickly the first tokens arrive, not how long the last ones take.
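The arithmetic behind an end-to-end comparison like this can be sketched as time to first token plus a per-token cost for every token after the first. The function name `end_to_end_ms` and the latency figures below are placeholders chosen for illustration, not measurements of any particular GPU.

```python
def end_to_end_ms(ttft_ms: float, inter_token_ms: float, output_tokens: int) -> float:
    """Total response time: first token, then one inter-token gap per remaining token."""
    if output_tokens < 1:
        raise ValueError("output_tokens must be at least 1")
    return ttft_ms + (output_tokens - 1) * inter_token_ms


if __name__ == "__main__":
    # Hypothetical configurations that share a TTFT and differ by 2 ms per token.
    slower = end_to_end_ms(ttft_ms=100, inter_token_ms=10, output_tokens=128)
    faster = end_to_end_ms(ttft_ms=100, inter_token_ms=8, output_tokens=128)
    print(f"slower: {slower} ms, faster: {faster} ms, gap: {slower - faster} ms")
```

With streaming, the user starts reading after `ttft_ms`, so a gap that only accumulates across the later tokens affects perceived responsiveness far less than the same gap in TTFT would.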

The H200 can be 30–40% more expensive depending on your cloud provider and runtime. For an internal chatbot:

Whether you’re building for chat, RAG, summarization, or interactive UI flows, the FlexAI Inference Sizer helps you right-size your infrastructure: balancing cost, latency, and throughput in one clear view.

Deploy your model in one click using free credits from FlexAI to benchmark your workload before committing, or just sign up to try the Sizer.

FlexAI bridges the planning-to-deployment gap that often slows down AI teams.

Most AI infrastructure platforms promise scale, but few deliver true flexibility or developer autonomy.

FlexAI’s Workload as a Service solution was designed from the ground up by engineers from Apple, Intel, NVIDIA, and Tesla. FlexAI abstracts away infrastructure management with autoscaling, GPU pooling, and intelligent placement—so your models get the resources they need, when they need them.

FlexAI solves the real bottlenecks of modern AI deployment: infra complexity and fragility, underutilized compute, and a lack of transparency into costs.

Serving LLMs shouldn't require guesswork. Whether you're a startup experimenting with open-weight models or a platform team pushing to meet SLA requirements, FlexAI’s Inference Sizer gives you the numbers you need—and the ability to act on them.

We’re not stopping here. In the coming months, we will add more models to the Sizer (including diffusion models) and turn it into a full-fledged co-pilot to use as you launch your inference workloads. After that, expect to see the same for fine-tuning and training. Stay tuned!

Need help or want to compare setups? Join our Slack or drop us a line at support@flex.ai.

You’ll get GPU estimates, alternative configs, and the ability to spin up endpoints instantly.

Better yet, every sign-up comes with €100 in FlexAI credits so you can test out your deployments.

To celebrate this launch we’re offering €100 starter credits for first-time users!
